By Josh Hacker, Chief Science Officer, Jupiter Intelligence

The Imperative for Downscaling in Climate Risk Analysis

As the impacts of climate change continue to manifest in myriad ways, some businesses are turning to global climate models (GCMs) for physical climate risk data and analytics. But there is growing evidence that GCM data, if used directly, can lead to inaccurate risk analysis and, consequently, flawed business decisions.

GCMs, while integral to understanding global climate patterns, are inherently limited in their granularity. Because they lack spatial and temporal detail, these models cannot simulate extreme weather events accurately. Furthermore, GCMs have inherent biases that vary with location, time of day, and how extreme the conditions are, i.e., where in its range of variability a parameter lies (warm or cold).

Not all climate risk data is created equal. And that inequality can be significant.

There is great potential for misleading results when relying on GCM data for financial impact, asset, portfolio, or company valuation. “At these scales, and in the context of material extremes associated with climate-induced weather-scale phenomenon, the ways in which the Network for Greening the Financial System (NGFS)1 methodology are being employed is very likely misleading.”2 This suggests that for effective physical climate risk data and analytics, businesses must augment GCM output with additional information that powers bottom-up risk analyses.

A crucial step in making climate science useful for business decision-making is to 'downscale' the GCM output, adding spatial and temporal granularity to project damaging extremes like tropical cyclones, local windstorms, and rainfall leading to pluvial and fluvial flooding. However, today's climate risk assessments often rely on coarse data, such as a single value representing a hazard for an entire nation or state, or on GCM data used directly at scales of approximately 100-125 km. Those data can only inform top-down analyses, which attempt to project asset-level risks from large-scale averages and are known to be inaccurate and often misleading. The Earth system delivers impacts at much smaller scales. This discrepancy has serious consequences for businesses and makes a strong case for more granular data in physical climate risk analytics. A bottom-up analysis is needed, in which risks at the asset level are aggregated up to the portfolio level while preserving the effects of highly variable risks within the portfolio.

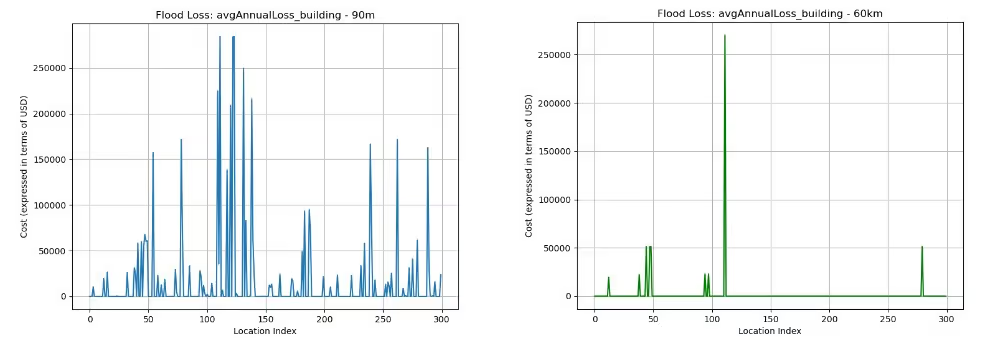
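The difference between top-down and bottom-up aggregation can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the depth-damage curve, the asset values, and the flood depths are invented and do not come from any real model or dataset. It shows only the general mechanism: when damage responds nonlinearly to a hazard, applying the damage curve to a portfolio-average hazard gives a different answer than summing asset-level losses.

```python
def damage_fraction(depth_m: float) -> float:
    """Hypothetical depth-damage curve (an assumption, not a published curve):
    no loss up to 0.3 m of water, then linear up to total loss at 3.3 m."""
    if depth_m <= 0.3:
        return 0.0
    return min(1.0, (depth_m - 0.3) / 3.0)


# Invented portfolio: four equally valued assets, only one of which floods badly.
assets = [
    {"name": "A", "value": 1_000_000, "depth_m": 0.0},
    {"name": "B", "value": 1_000_000, "depth_m": 0.0},
    {"name": "C", "value": 1_000_000, "depth_m": 0.1},
    {"name": "D", "value": 1_000_000, "depth_m": 1.5},
]


def bottom_up_loss(portfolio) -> float:
    """Evaluate damage at each asset, then aggregate to the portfolio."""
    return sum(a["value"] * damage_fraction(a["depth_m"]) for a in portfolio)


def top_down_loss(portfolio) -> float:
    """Apply one averaged hazard value to the whole portfolio."""
    mean_depth = sum(a["depth_m"] for a in portfolio) / len(portfolio)
    total_value = sum(a["value"] for a in portfolio)
    return total_value * damage_fraction(mean_depth)


if __name__ == "__main__":
    print(f"bottom-up loss: {bottom_up_loss(assets):,.0f}")
    print(f"top-down loss:  {top_down_loss(assets):,.0f}")
```

With these made-up inputs the averaged depth barely crosses the damage threshold, so the top-down figure comes out well below the bottom-up one. Real portfolios can err in either direction, depending on how the hazard varies across the assets.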

Many risk professionals may think coarse data is good enough for simple problems, especially since it is generally available at low cost. However, most people outside the climate science community don’t realize how much misleading information coarse data can introduce into their resilience planning. A commonly used source of data for physical risk and stress testing is mostly at 60 km spacing. Below we show the consequences of using data at that granularity.

Dealing with the uncertainty that coarse data introduces may cost you more than the data itself.

Take, for example, the implications of coarse data for flood risk analysis. For a portfolio of 300 buildings in the southern United Kingdom, coarse data significantly underestimates the average annual loss (AAL) from flood damage relative to high-resolution data. The high-resolution data indicates a portfolio-wide loss of around $4.7 million, whereas the coarse data projects a mere $570 thousand, roughly one-eighth as much.

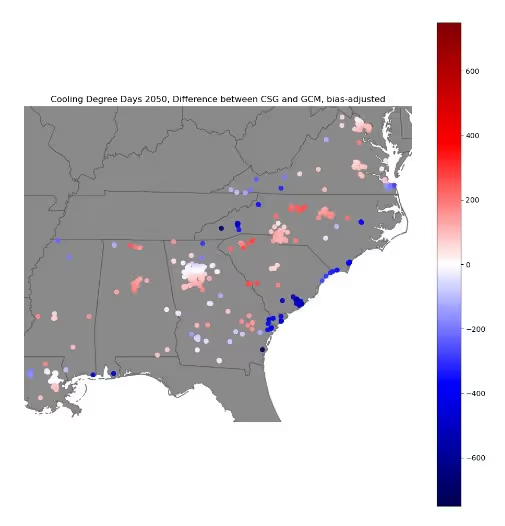

Beyond flood risk, data granularity also affects other climate-related risks such as heat. Cooling degree days (CDD) are a common metric for assessing energy consumption. However, using GCM-scale data can lead to a significant overestimation of CDD in coastal zones and high-elevation areas. In turn, this miscalculation could lead to systematic errors in estimating electricity use for an arbitrary portfolio of assets.
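As a rough illustration of why a coarse grid cell can overstate CDD at a coastal site, the sketch below uses the common definition of CDD as the sum of daily mean temperature in excess of a base temperature. The 18 °C base is an assumed convention, and both temperature series are invented: one stands in for a coarse cell dominated by warmer inland conditions, the other for a cooler coastal location inside that cell.

```python
def cooling_degree_days(daily_mean_temps_c, base_c=18.0):
    """Sum of daily exceedances above a base temperature.

    The 18 degC default is an assumed convention; other bases are also used.
    """
    return sum(max(0.0, t - base_c) for t in daily_mean_temps_c)


# Invented three-day series in degC, for illustration only.
coarse_cell_temps = [24.0, 26.0, 25.0]   # cell average, dominated by inland heat
coastal_site_temps = [19.0, 20.0, 18.0]  # sea-moderated site inside the same cell

cdd_coarse = cooling_degree_days(coarse_cell_temps)
cdd_coastal = cooling_degree_days(coastal_site_temps)

if __name__ == "__main__":
    print(f"CDD from coarse cell:  {cdd_coarse:.1f}")
    print(f"CDD at coastal site:   {cdd_coastal:.1f}")
```

Because CDD only counts temperature above the base, a few degrees of warm bias in the cell average can translate into a large relative error in the metric. That is the kind of overestimation the coastal and high-elevation cases describe.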

Businesses should not underestimate the power of granular data for effective climate risk assessment. High-resolution data, accounting for the fine-scale details of the natural and built terrain, including elevation, slope, imperviousness, and barriers, leads to more accurate risk predictions. The more granularity in the models, the more flexible the data derived from those models. This shows up in a business context as the availability of the exact metric needed, as opposed to the “next best” metric which may introduce additional uncertainty or need further manipulation.

As climate change continues to impact businesses worldwide, it is crucial to adopt a more refined approach to climate risk data and analytics. While GCMs provide an essential understanding of global climate trends, their limitations necessitate a more nuanced approach. By downscaling GCMs to incorporate granular data, businesses can better understand the risks associated with climate change and make more informed decisions to mitigate its impacts.

Downscaling GCM data is more than just a method; it's a necessary adaptation in the face of an evolving climate. It's time for businesses to recognize the fundamental nature of granularity for effective climate risk analysis.

________________________________________________________________________________________

1Network of Central Banks and Supervisors for Greening the Financial System. The guidance from the NGFS is similar in spirit and approach to others, such as the Bank of England, and grounded in the Task Force on Climate-related Financial Disclosures (TCFD) framework.

2Pitman, A. J., T. Fiedler, N. Ranger, C. Jakob, N. Ridder, S. Perkins-Kirkpatrick, N. Wood, and G. Abramowitz. 2022. “Acute Climate Risks in the Financial System: Examining the Utility of Climate Model Projections.” Environmental Research: Climate 1 (2): 025002.
